Istio: Noninvasive Governance of Microservices on Hybrid Cloud

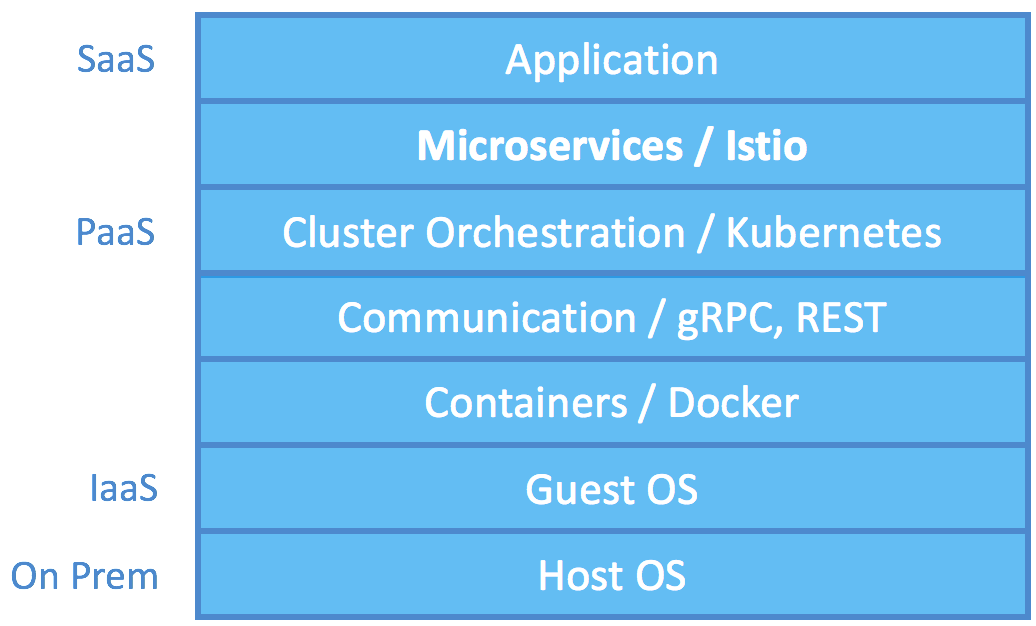

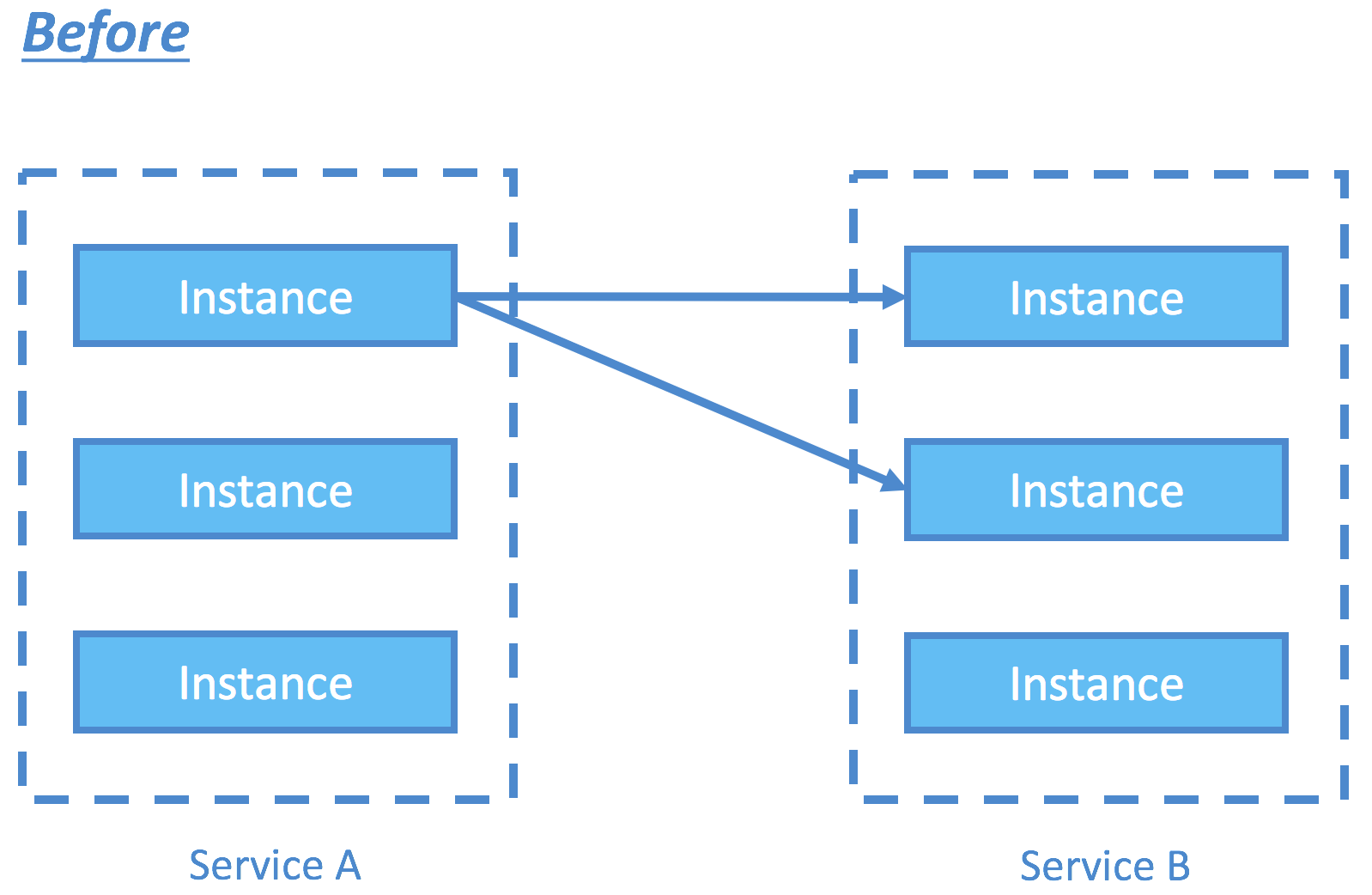

As presented in my previous post, microservices are the state-of-the-art architecture for building scalable, highly available, manageable backends. No more 30-minute builds, single points of failure, or constant regressions from tangled linking and backdoor dependencies. But as one breaks a monolithic application into microservices, new challenges surface. Procedure calls become inter-process communication over an asynchronous network, which requires service discovery, load balancing, authentication, authorization, liveness detection, traffic monitoring, and more. Handling all of these is not only a ton of work but also requires code changes to your services.

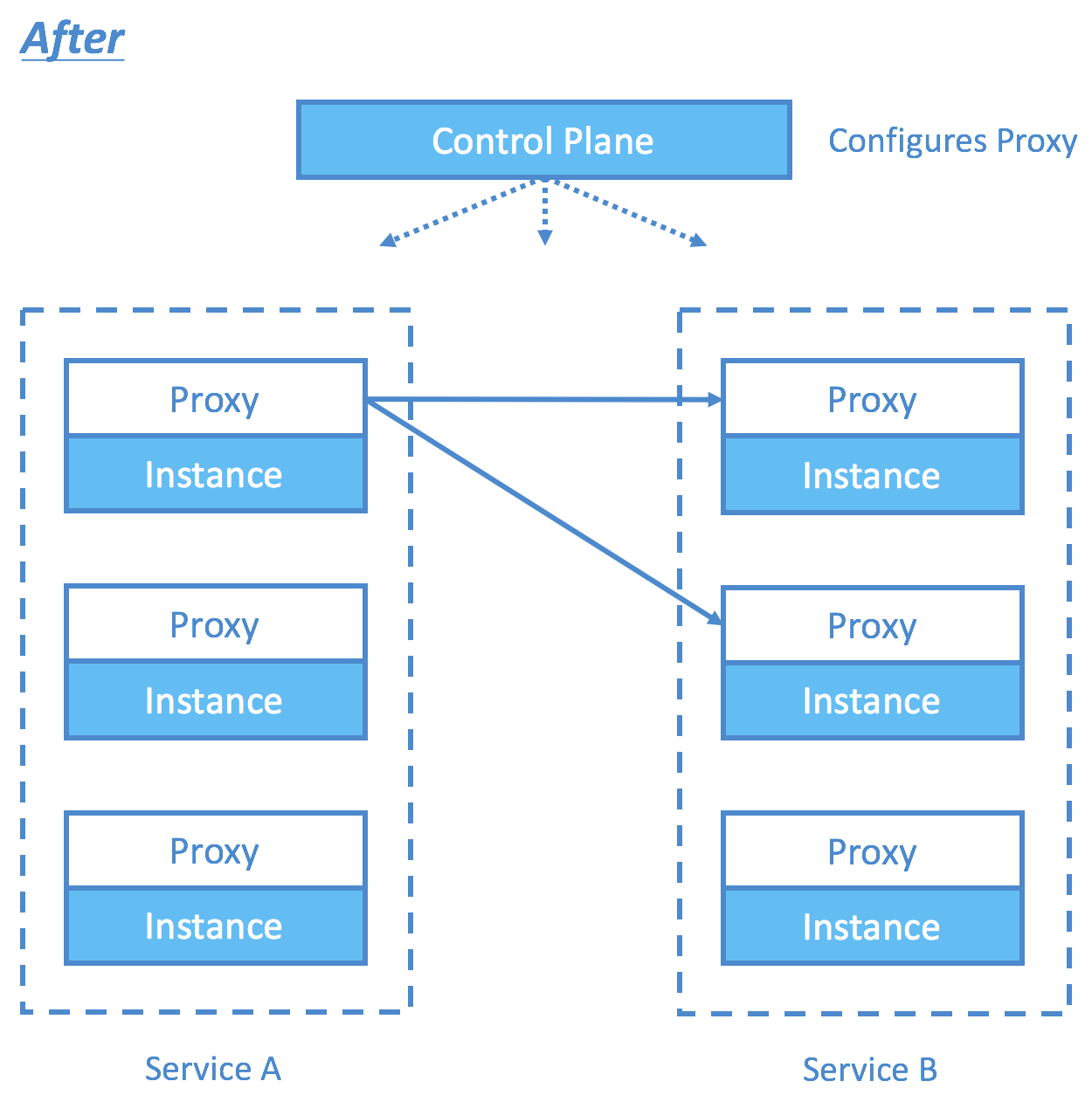

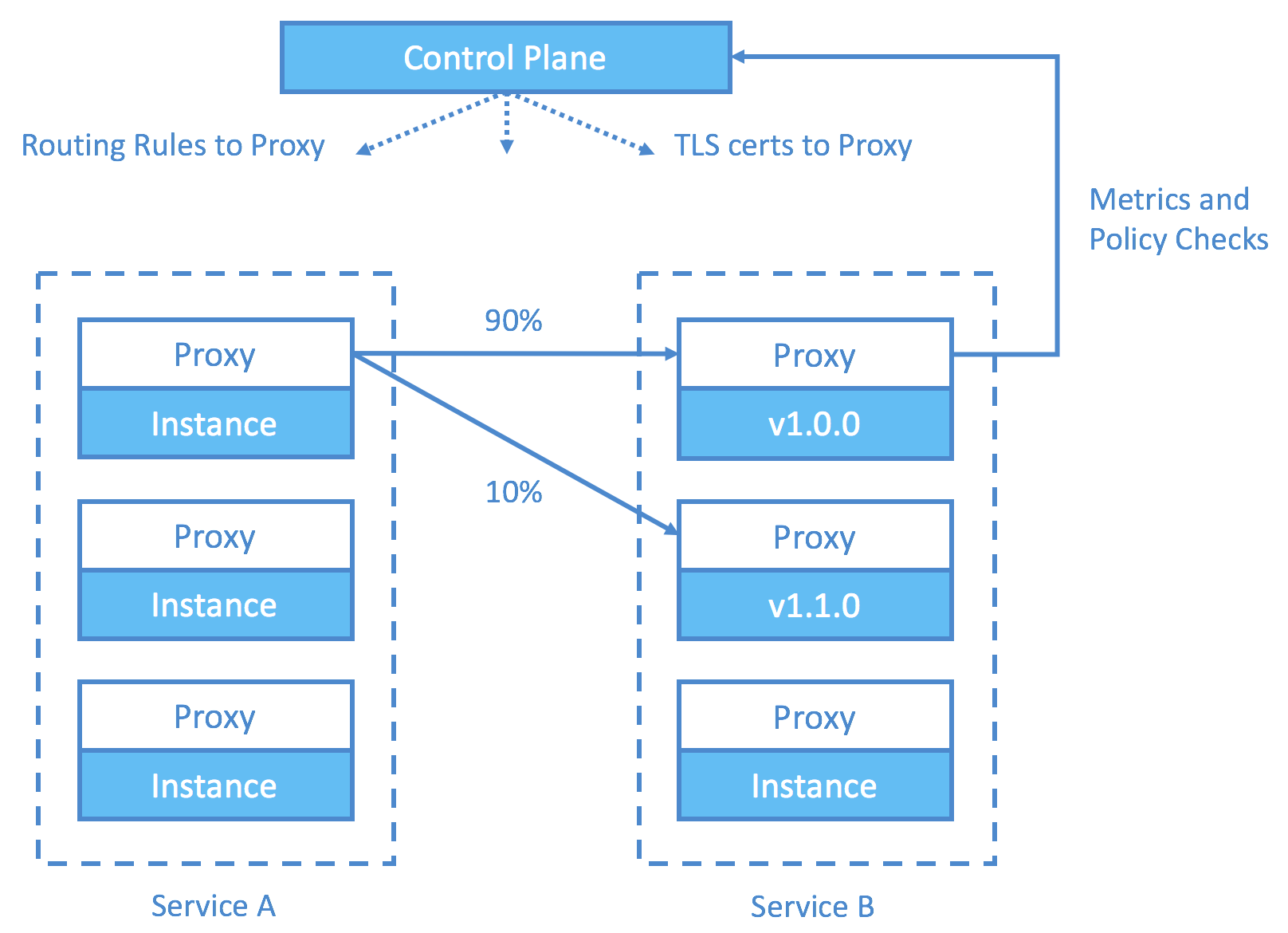

Istio addresses many of these challenges in the move toward a service mesh. The term service mesh is often used to describe the network of microservices that make up such applications and the interactions between them. Perhaps the most profound innovation of Istio is its noninvasive approach to connecting, managing, and securing microservices. It does so using Envoy, a high-performance proxy originally developed at Lyft. Istio pairs each of your service containers with a proxy that intercepts and redirects all of its ingress and egress traffic. By configuring specific routing rules, access control, and certificates on the proxy through the control plane, microservices can be managed without touching the services themselves.

Consider a few powerful use cases.

Canary release or A/B testing: Configure routing rules to direct requests for a downstream service to instances of different versions.
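To make the idea concrete, here is a minimal sketch of weighted routing as a proxy might apply it. This is not Istio's rule format or Envoy's implementation; the `ROUTING_RULES` table, the `reviews` service name, and the 90/10 split are all assumptions made up for illustration.

```python
import random
from collections import Counter

# Hypothetical rule table: service name -> list of (version, weight) pairs.
ROUTING_RULES = {
    "reviews": [("v1", 90), ("v2", 10)],  # send roughly 10% of traffic to the canary
}

def pick_version(service, rules=ROUTING_RULES, rng=random):
    """Choose a destination version in proportion to its configured weight."""
    versions, weights = zip(*rules[service])
    return rng.choices(versions, weights=weights, k=1)[0]

def simulate(service, n=10_000, seed=0):
    """Route n requests and count how many land on each version."""
    rng = random.Random(seed)
    return Counter(pick_version(service, rng=rng) for _ in range(n))
```

Calling `simulate("reviews")` should show most requests landing on `v1` and a small share on `v2`. Shifting the weights gradually is what turns this into a canary rollout.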

Secured RPC: Each proxy receives certificates from the control plane, which acts as the CA and root of trust. The sender bootstraps a secure channel using the recipient's public key from its certificate, exchanges a symmetric key over that channel, and carries on the communication using the symmetric key.
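The key exchange described above can be sketched with a deliberately toy example. Everything here is an assumption for illustration: the RSA key uses textbook-sized primes (61 and 53), the symmetric cipher is a simple XOR keystream, and certificate validation against the CA is omitted. It is insecure by design and is not how Istio or Envoy implement their TLS handshake; it only shows the shape of "wrap a symmetric key with the recipient's public key, then switch to the symmetric key".

```python
import hashlib
import secrets

# Toy RSA key pair for the recipient (n = 61 * 53). Illustration only, NOT secure.
N, E, D = 3233, 17, 2753

def rsa_encrypt(m, e=E, n=N):
    """Encrypt a small integer with the recipient's public key."""
    return pow(m, e, n)

def rsa_decrypt(c, d=D, n=N):
    """Decrypt with the recipient's private key."""
    return pow(c, d, n)

def keystream_xor(key, data):
    """Toy symmetric cipher: XOR the data with a SHA-256-derived keystream."""
    stream = bytearray()
    counter = 0
    while len(stream) < len(data):
        stream.extend(hashlib.sha256(f"{key}:{counter}".encode()).digest())
        counter += 1
    return bytes(a ^ b for a, b in zip(data, stream))

def secure_round_trip(message):
    """Sender wraps a fresh session key; recipient unwraps it and decrypts."""
    session_key = secrets.randbelow(N - 2) + 2       # sender picks a symmetric key
    wrapped_key = rsa_encrypt(session_key)           # sent over the network
    ciphertext = keystream_xor(session_key, message) # sent over the network
    recovered_key = rsa_decrypt(wrapped_key)         # recipient side
    return keystream_xor(recovered_key, ciphertext)
```

`secure_round_trip(b"hello mesh")` returns the original bytes, because XOR with the same keystream is its own inverse once both sides hold the same session key.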

Policy enforcement (access control, quota limits, etc.): The proxy queries the control plane for the policies that apply to the type of request it received and the client identity associated with that request, caches the results, and enforces the policy itself.
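The query, cache, enforce loop might look roughly like the sketch below. The `PolicyCache` class, the `fetch_policy` callback standing in for the control plane, and the 60-second time-to-live are hypothetical names and values, not part of Istio's API.

```python
import time

class PolicyCache:
    """Caches allow/deny decisions fetched from a (hypothetical) control plane."""

    def __init__(self, fetch_policy, ttl_seconds=60.0, clock=time.monotonic):
        self._fetch = fetch_policy   # callable(client_id, request_type) -> bool
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}           # (client_id, request_type) -> (decision, expiry)

    def is_allowed(self, client_id, request_type):
        key = (client_id, request_type)
        now = self._clock()
        hit = self._entries.get(key)
        if hit is not None and hit[1] > now:
            return hit[0]            # fresh cached decision, no control-plane call
        decision = self._fetch(client_id, request_type)
        self._entries[key] = (decision, now + self._ttl)
        return decision
```

The expiry time is the design choice that matters here: a longer time-to-live means fewer calls to the control plane, at the cost of policy changes taking longer to reach the proxy.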

Telemetry: Monitor all traffic in the service mesh using data reported by the proxies.
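As a rough picture of what "data reported by the proxies" could feed into, here is a small aggregator. The report fields (source, destination, status code, latency) and the `TelemetryCollector` name are assumptions for this sketch, not Istio's actual telemetry schema.

```python
from collections import defaultdict

class TelemetryCollector:
    """Aggregates per-request reports sent by proxies (hypothetical schema)."""

    def __init__(self):
        self._stats = defaultdict(
            lambda: {"requests": 0, "errors": 0, "total_ms": 0.0}
        )

    def report(self, source, destination, status_code, latency_ms):
        """Record one request observed by a proxy."""
        entry = self._stats[(source, destination)]
        entry["requests"] += 1
        entry["total_ms"] += latency_ms
        if status_code >= 500:       # count server errors only
            entry["errors"] += 1

    def summary(self, source, destination):
        """Return request count, error rate, and mean latency, or None if unseen."""
        entry = self._stats.get((source, destination))
        if entry is None:
            return None
        return {
            "requests": entry["requests"],
            "error_rate": entry["errors"] / entry["requests"],
            "mean_latency_ms": entry["total_ms"] / entry["requests"],
        }
```

Because every request passes through a proxy, the services themselves never need to call `report`; that is the noninvasive part.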

But how does Istio ensure that a proxy is paired with a container on creation? The answer is Kubernetes (k8s) pods. A pod is an atomic orchestration unit that consists of one or more containers with shared storage, a shared network namespace, and a specification of how to run the containers. It is atomic because all containers in a pod always run together, creating exactly the close coupling that Istio wants between the proxy and the service. But Istio is noninvasive, and the pod spec from the application does not include the proxy. So we are back to the question: how does Istio ensure that a proxy is paired with a container on creation?

The answer still lies in k8s. Istio uses the k8s initializer, an extension mechanism that can modify a pod (or any other k8s object) before it is created, so a container can be injected without changing the pod spec written by the application. Istio uses it to inject a proxy container into each pod.
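The injection step can be pictured as a function that takes the pod definition submitted by the application and returns a copy with a proxy container added. This is only a sketch of the idea using plain dictionaries; the `inject_sidecar` function, the `proxy` container name, and the image reference are hypothetical, and it does not call the Kubernetes API or reproduce Istio's actual injector.

```python
import copy

# Hypothetical proxy container definition; name and image are placeholders.
PROXY_CONTAINER = {"name": "proxy", "image": "example.invalid/proxy:latest"}

def inject_sidecar(pod, proxy=PROXY_CONTAINER):
    """Return a copy of the pod with the proxy container added exactly once."""
    patched = copy.deepcopy(pod)     # leave the application's own spec untouched
    containers = patched.setdefault("spec", {}).setdefault("containers", [])
    if not any(c.get("name") == proxy["name"] for c in containers):
        containers.append(copy.deepcopy(proxy))
    return patched

app_pod = {
    "metadata": {"name": "reviews"},
    "spec": {"containers": [{"name": "app", "image": "example.invalid/app:1"}]},
}
patched_pod = inject_sidecar(app_pod)
```

After this runs, `patched_pod` lists both `app` and `proxy`, while `app_pod` still lists only `app`, which mirrors how the application team never has to mention the proxy in what they write.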

Back when pods and k8s initializers were first introduced, people doubted that such features were useful at all and suspected they were over-designed. But after the debut of Istio, which takes advantage of both, the vision and insight of their creators could not be more evident.